By: Husam Yaghi

Here is a step-by-step, tested installation of multiple LLMs on your local machine with access to your own local dataset, all through a single chat interface with a dropdown menu for selecting the desired LLM. My PC has only one GPU. I installed and ran deepseek-r1:32B, Mistral, Qwen, Gemma, and llama3.3.

Step 1: Ensure System Requirements

Before proceeding, confirm the following:

- Operating System: Windows 10/11 or macOS.

- Python: Install Python 3.9 or higher from python.org.

- NVIDIA GPU (optional):

- To run LLMs locally on your GPU, make sure you have an NVIDIA GPU with CUDA support.

- Install the CUDA Toolkit (version 11.8) and cuDNN if using PyTorch with GPU acceleration.

Step 2: Install Python

- Download Python 3.9 or higher from python.org.

- During installation:

- Check the box “Add Python to PATH”.

- Choose the option to install pip (Python’s package manager).

- After installation, verify Python is installed by running:

python --version

If python doesn’t work, try:

python3 --version
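The same check can be run from inside Python, which helps when `python` and `python3` point at different installations. This is a minimal sketch; the helper name `meets_minimum` is made up for this example.

```python
import sys


def meets_minimum(version_info, minimum=(3, 9)):
    """Return True if a (major, minor, ...) version tuple is at least `minimum`."""
    return tuple(version_info[:2]) >= tuple(minimum)


if __name__ == "__main__":
    print("Running Python", sys.version.split()[0])
    print("Meets the 3.9 requirement:", meets_minimum(sys.version_info))
```

Run it with whichever command you plan to use for the script, so you test the interpreter that will actually launch the GUI.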

Step 3: Install Required Libraries

The script requires multiple Python libraries. Run the following commands in a terminal (Command Prompt, PowerShell, or macOS Terminal):

Command to Install All Required Libraries:

pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

pip install transformers datasets PyPDF2 python-docx openpyxl python-pptx pandas SpeechRecognition pyttsx3 psutil

Optional Debugging Commands (If Installation Fails):

If the above command fails, install individual libraries:

pip install PyPDF2

pip install python-docx

pip install openpyxl

pip install pandas

pip install python-pptx

pip install SpeechRecognition

pip install pyttsx3

pip install psutil

pip install transformers datasets

# (For GPU users only)

pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118
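After installing, it can be useful to confirm which libraries Python can actually find. The sketch below is one way to do that with the standard library. The list uses import names, which differ from some pip package names (python-docx is imported as `docx`, python-pptx as `pptx`, SpeechRecognition as `speech_recognition`), and it includes `pandas` because the script imports it. The helper name `missing_modules` is hypothetical.

```python
import importlib.util

# Import names, not pip package names.
REQUIRED_MODULES = [
    "torch", "transformers", "datasets", "PyPDF2", "docx", "openpyxl",
    "pptx", "pandas", "speech_recognition", "pyttsx3", "psutil",
]


def missing_modules(names):
    """Return the module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    print("Missing:", ", ".join(missing) if missing else "none")
```

Anything reported as missing can then be installed individually with pip.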

Step 4: Install Ollama

- Download and install Ollama from https://ollama.ai.

- After installation, verify that Ollama works by running:

ollama list
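If `ollama list` reports that the command is not recognized, the usual cause is that the executable is not on your PATH. A small sketch for checking this from Python, using only the standard library; the helper name `is_on_path` is an assumption of this example.

```python
import shutil


def is_on_path(program):
    """Return True if `program` can be found on the current PATH."""
    return shutil.which(program) is not None


if __name__ == "__main__":
    if is_on_path("ollama"):
        print("ollama found at", shutil.which("ollama"))
    else:
        print("ollama is not on PATH; reopen the terminal or reinstall Ollama")
```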

Step 5: Download Relevant LLM Models

Run the following commands in your terminal to download the required models locally:

ollama pull qwen

ollama pull deepseek-r1:32B

ollama pull mistral

ollama pull gemma

ollama pull llama3.3
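The pulls can also be scripted so that one failed download does not stop the rest. The following is a rough sketch, not part of the original script: `MODELS`, `pull_command`, and `pull_all` are names invented here, and the list should be adjusted to the models you want. The `runner` parameter exists only so the logic can be exercised without downloading anything.

```python
import subprocess

# Adjust this list to the models you plan to use.
MODELS = ["qwen", "deepseek-r1:32B", "mistral", "gemma", "llama3.3"]


def pull_command(model):
    """Build the argument list for pulling one model."""
    return ["ollama", "pull", model]


def pull_all(models, runner=subprocess.run):
    """Pull each model in turn and return the names that failed."""
    failed = []
    for model in models:
        try:
            result = runner(pull_command(model))
        except FileNotFoundError:  # ollama is not installed or not on PATH
            failed.append(model)
            continue
        if result.returncode != 0:
            failed.append(model)
    return failed


if __name__ == "__main__":
    # Only print the commands here; call pull_all(MODELS) to download for real.
    for model in MODELS:
        print(" ".join(pull_command(model)))
```

Calling `pull_all(MODELS)` starts the actual downloads, which are large, so run it only when you have the disk space and bandwidth.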

Step 6: Organize the Directory Structure

Create the following directory structure on your PC:

C:\
├── deepseek\
│   ├── main_gui.py   # Place the script here
│   ├── cleanup.py    # Optional system cleanup script
│   ├── prepare.py    # Optional script for auto-installing
│   ├── …
│
└── testDataSet\      # Place datasets here
    ├── file1.pdf
    ├── file2.docx
    ├── file3.xlsx
    └── file4.pptx
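The two folders can be created by hand or with a few lines of Python. This sketch assumes the folder names shown above; `create_layout` is a hypothetical helper, and the demo writes into a temporary directory so it is safe to run as is.

```python
import tempfile
from pathlib import Path


def create_layout(base):
    """Create the deepseek and testDataSet folders under `base` and return them."""
    base = Path(base)
    script_dir = base / "deepseek"
    dataset_dir = base / "testDataSet"
    for folder in (script_dir, dataset_dir):
        folder.mkdir(parents=True, exist_ok=True)  # no error if it already exists
    return script_dir, dataset_dir


if __name__ == "__main__":
    # Replace the temporary directory with "C:/" to create the real folders.
    with tempfile.TemporaryDirectory() as tmp:
        for folder in create_layout(tmp):
            print("Created", folder)
```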

Step 7: Run the Script

- Open a terminal (Command Prompt, PowerShell, or macOS Terminal).

- Navigate to the directory containing main_gui.py:

cd C:\deepseek

- Run the script:

python main_gui.py

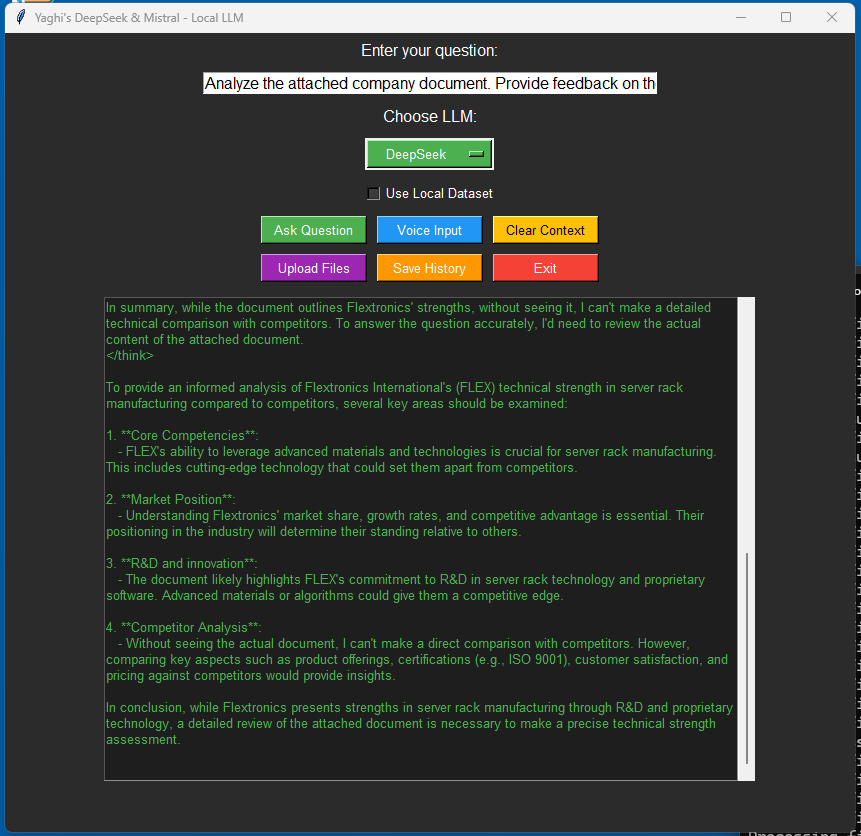

Step 8: Interact with the GUI

Once the GUI launches:

- Ask Questions: Type a question in the input field and choose a model from the dropdown.

- Voice Input: Click the “Voice Input” button to use your microphone.

- Upload Files: Add context by uploading PDFs, Word documents, or Excel files.

- Save History: Save your question-response history using the “Save History” button.

- Clear Context: Clear all previously uploaded context with the “Clear Context” button.
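To make the Save History output concrete, here is a small sketch of the text layout the script writes: one "Question:" line and one "Response:" line per exchange, separated by a blank line. The function name `format_history` is introduced for illustration; the script itself writes these lines straight to the chosen file.

```python
def format_history(history):
    """Render question/response pairs in the layout used when saving history."""
    lines = []
    for entry in history:
        lines.append(f"Question: {entry['question']}\n")
        lines.append(f"Response: {entry['response']}\n\n")
    return "".join(lines)


if __name__ == "__main__":
    sample = [{"question": "What is in file1.pdf?", "response": "A short summary."}]
    print(format_history(sample), end="")
```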

Step 9: Optional Steps

System Cleanup (Optional)

Run cleanup.py to free up GPU memory, clear caches, and kill unnecessary processes:

python cleanup.py
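This guide does not list cleanup.py, so the following is only a sketch of the kind of step such a script might contain, not the author's script. It runs Python's garbage collector and, if PyTorch happens to be installed with CUDA available, releases PyTorch's cached GPU memory. The function name `free_python_memory` is hypothetical.

```python
import gc


def free_python_memory():
    """Run garbage collection and, if PyTorch is present, release cached GPU memory."""
    collected = gc.collect()  # number of unreachable objects found
    cuda_cache_cleared = False
    try:
        import torch
    except (ImportError, OSError):
        torch = None  # PyTorch is optional for this sketch
    if torch is not None and torch.cuda.is_available():
        torch.cuda.empty_cache()  # frees cached blocks held by this process only
        cuda_cache_cleared = True
    return collected, cuda_cache_cleared


if __name__ == "__main__":
    collected, cleared = free_python_memory()
    print(f"Collected {collected} objects; CUDA cache cleared: {cleared}")
```

Note that this only affects the Python process that runs it. Models loaded by Ollama live in Ollama's own process, so their memory is not released by this call.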

Verify GPU Support

If you are using a GPU, verify that PyTorch can access it:

python -c "import torch; print(torch.cuda.is_available())"

- If True, PyTorch is configured to use your GPU.
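A slightly more informative version of the same check, written so it does not crash when PyTorch is missing. This is a sketch; `gpu_status` is a name chosen for this example.

```python
def gpu_status():
    """Describe whether PyTorch is installed and can see a CUDA device."""
    try:
        import torch
    except (ImportError, OSError):
        return "PyTorch is not installed (or failed to load)"
    if torch.cuda.is_available():
        return f"CUDA available: {torch.cuda.get_device_name(0)}"
    return "PyTorch is installed, but CUDA is not available"


if __name__ == "__main__":
    print(gpu_status())
```

Keep in mind that main_gui.py talks to Ollama through a subprocess and does not import torch, so this check tells you about your PyTorch installation rather than about Ollama's own GPU detection.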

Troubleshooting

- No GPU Detected:

- Ensure the NVIDIA GPU drivers, CUDA Toolkit, and cuDNN are installed.

- Reinstall PyTorch with GPU support:

pip uninstall torch torchvision torchaudio

pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

- Missing Libraries:

- Install missing libraries using:

pip install <library_name>

- Performance Issues:

- Use a smaller model (e.g., deepseek-r1:1.5b instead of deepseek-r1:32b).

- Free system resources by running cleanup.py.

- Ollama Model Not Found:

- Ensure the model is downloaded:

ollama pull <model_name>
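To see which models are still missing, you can compare the names you need against the output of `ollama list`. The sketch below assumes that output is a table with a header row and the model name in the first column. The sample text and its ID values are made up for illustration, and the helper names are hypothetical.

```python
def parse_model_names(list_output):
    """Extract model names from `ollama list` output (first column, header skipped)."""
    names = []
    for line in list_output.splitlines()[1:]:
        parts = line.split()
        if parts:
            names.append(parts[0])
    return names


def is_installed(model, installed):
    """Check a model against installed names, treating a bare name as ':latest'."""
    wanted = model.lower() if ":" in model else f"{model.lower()}:latest"
    return wanted in {name.lower() for name in installed}


# Illustrative sample only; real IDs, sizes, and dates will differ.
SAMPLE = """NAME               ID              SIZE      MODIFIED
deepseek-r1:32b    abc123          19 GB     2 days ago
mistral:latest     def456          4.1 GB    3 days ago
"""

if __name__ == "__main__":
    installed = parse_model_names(SAMPLE)
    for model in ["mistral", "deepseek-r1:32B", "gemma"]:
        print(model, "installed" if is_installed(model, installed) else "missing")
```

To use it on your machine, pass in the real output, for example `subprocess.run(["ollama", "list"], capture_output=True, text=True).stdout`.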

Here is the complete Python script:

main_gui.py

Make sure you have downloaded all the LLMs the script uses. In PowerShell, run:

ollama pull qwen

ollama pull deepseek-r1:32B

ollama pull mistral

ollama pull gemma

ollama pull llama3.3

ollama pull phi4

ollama pull qwen2.5

ollama pull codellama

import tkinter as tk
from tkinter import scrolledtext, filedialog
import subprocess
import threading
import speech_recognition as sr
import pyttsx3
import os
import glob
import PyPDF2
import docx
import pandas as pd

history = []             # List of {"question": ..., "response": ...} dicts
context_history = ""     # Running text context sent to the model with each question
engine = pyttsx3.init()  # Shared text-to-speech engine

# Folder holding your local dataset; change it to match your machine
# (for example, the testDataSet folder from Step 6).
LOCAL_DATASET_DIR = r"D:\yaghiDataSet"


def clear_context(result_text):
    # Discard all accumulated context so a new topic starts fresh
    global context_history
    context_history = ""
    result_text.insert(tk.END, "Context cleared. You can now start a new topic.\n\n", "info")


def save_history():
    # Write the question/response history to a text file chosen by the user
    file_path = filedialog.asksaveasfilename(defaultextension=".txt", filetypes=[("Text Files", "*.txt")])
    if file_path:
        with open(file_path, "w", encoding="utf-8") as file:
            for entry in history:
                file.write(f"Question: {entry['question']}\n")
                file.write(f"Response: {entry['response']}\n\n")


def upload_files(result_text):
    # Extract text from user-selected files and append it to the context
    global context_history
    try:
        file_paths = filedialog.askopenfilenames(
            title="Select Files",
            filetypes=[("PDF Files", ".pdf"), ("Word Files", ".docx"), ("Excel Files", ".xlsx"), ("All Files", "*.*")]
        )
        if not file_paths:
            result_text.insert(tk.END, "No files selected.\n\n", "info")
            return

        for file_path in file_paths:
            file_name = os.path.basename(file_path)
            extracted_content = ""
            if file_path.endswith(".pdf"):
                with open(file_path, "rb") as pdf_file:
                    reader = PyPDF2.PdfReader(pdf_file)
                    for page in reader.pages:
                        # extract_text() can return None for pages without text
                        extracted_content += (page.extract_text() or "") + "\n"
            elif file_path.endswith(".docx"):
                doc = docx.Document(file_path)
                for para in doc.paragraphs:
                    extracted_content += para.text + "\n"
            elif file_path.endswith(".xlsx"):
                df = pd.read_excel(file_path)
                extracted_content = df.to_string()
            else:
                result_text.insert(tk.END, f"Unsupported file format: {file_name}\n\n", "error")
                continue

            context_history += extracted_content + "\n"
            result_text.insert(tk.END, f"Successfully added content from: {file_name}\n\n", "info")
    except Exception as e:
        result_text.insert(tk.END, f"An error occurred during file upload: {str(e)}\n\n", "error")


def load_local_dataset():

    # Read every supported file in LOCAL_DATASET_DIR into the global context
    global context_history
    try:
        # Check if the directory exists
        if not os.path.exists(LOCAL_DATASET_DIR):
            return "Error: Local dataset directory does not exist."

        # Read all text files, PDFs, Word documents, or other supported formats
        dataset_content = ""
        for file_path in glob.glob(os.path.join(LOCAL_DATASET_DIR, "*")):
            file_name = os.path.basename(file_path)
            print(f"Processing file: {file_name}")  # Debug: Log file name
            if file_path.endswith(".txt"):
                try:
                    with open(file_path, "r", encoding="utf-8") as file:
                        dataset_content += file.read() + "\n"
                except UnicodeDecodeError:
                    # Fall back to another encoding if UTF-8 fails
                    with open(file_path, "r", encoding="latin1") as file:
                        dataset_content += file.read() + "\n"
            elif file_path.endswith(".pdf"):
                with open(file_path, "rb") as pdf_file:
                    reader = PyPDF2.PdfReader(pdf_file)
                    for page in reader.pages:
                        # extract_text() can return None for pages without text
                        dataset_content += (page.extract_text() or "") + "\n"
            elif file_path.endswith(".docx"):
                doc = docx.Document(file_path)
                for para in doc.paragraphs:
                    dataset_content += para.text + "\n"
            elif file_path.endswith(".xlsx"):
                df = pd.read_excel(file_path)
                dataset_content += df.to_string() + "\n"
            else:
                print(f"Unsupported file format: {file_name}")  # Debug: Log unsupported files
                dataset_content += f"Unsupported file format: {file_name}\n"

        # Add the dataset content to the global context_history
        context_history += dataset_content
        print(f"Dataset content loaded (size: {len(dataset_content)} characters)")  # Debug: Log content size
        return "Successfully loaded local dataset."
    except Exception as e:
        return f"Error loading local dataset: {str(e)}"


def voice_input(question_entry, result_text):

    recognizer = sr.Recognizer()
    # sr.Microphone needs the PyAudio package to access the microphone
    with sr.Microphone() as source:
        result_text.insert(tk.END, "Listening...\n", "info")
        try:
            audio = recognizer.listen(source)
            # recognize_google sends the audio to Google's web API, so it needs an internet connection
            question = recognizer.recognize_google(audio)
            result_text.insert(tk.END, f"Recognized Question: {question}\n", "info")
            question_entry.insert(0, question)
        except sr.UnknownValueError:
            result_text.insert(tk.END, "Could not understand the audio.\n", "error")
        except sr.RequestError as e:
            result_text.insert(tk.END, f"Speech Recognition Error: {str(e)}\n", "error")


def speak_response(response):
    def tts():
        global engine
        try:
            if engine._inLoop:  # Check if the engine is already running
                engine.endLoop()  # End the current loop if necessary
            engine.say(response)
            engine.runAndWait()
        except RuntimeError:
            pass  # Ignore runtime errors from the speech engine

    tts_thread = threading.Thread(target=tts)
    tts_thread.daemon = True  # Ensure the thread terminates with the main program
    tts_thread.start()


def on_llm_submit(question_entry, result_text, use_local_llm, selected_llm):

    question = question_entry.get().strip()
    if not question:
        result_text.insert(tk.END, "Error: Question cannot be empty.\n\n", "error")
        return

    result_text.insert(tk.END, f"Question: {question}\n", "question")
    llm_choice = selected_llm.get()

    # Map every dropdown label to the Ollama model tag it should run
    model_tags = {
        "Codellama": "codellama",
        "DeepSeek-r1:32B": "deepseek-r1:32b",
        "DeepSeek-r1:1.5B": "deepseek-r1:1.5b",
        "Gemma": "gemma:latest",
        "Llama3:70B": "llama3:70b",
        "Llama3.3": "llama3.3:latest",
        "Mistral": "mistral",
        "Mistral (Latest)": "mistral:latest",
        "Mistral:7B": "mistral:7b",
        "Phi4": "phi4:latest",
        "Qwen": "qwen",
        "Qwen2.5": "qwen2.5",
    }
    if llm_choice not in model_tags:
        result_text.insert(tk.END, f"Error: Unknown LLM selected: {llm_choice}\n", "error")
        return
    command = ["ollama", "run", model_tags[llm_choice]]

    # Debug: Show the selected LLM in the GUI
    result_text.insert(tk.END, f"Using model: {llm_choice}\n", "info")

    # Load the local dataset if "Use Local Dataset" is selected
    if use_local_llm.get():
        result_text.insert(tk.END, "Using local dataset for the query.\n", "info")
        dataset_status = load_local_dataset()  # Load and update context_history
        result_text.insert(tk.END, f"{dataset_status}\n", "info")

    # Run the LLM in a separate thread so the GUI stays responsive
    thread = threading.Thread(
        target=run_llm,
        args=(command, question, result_text, llm_choice),
    )
    thread.start()


def run_llm(command, question, result_text, llm_name):

    global context_history
    try:
        # Ensure context history size is manageable
        context_history = context_history[-5000:]  # Keep only the last 5,000 characters
        full_input = f"{context_history}\nQuestion: {question}"
        print(f"Context size: {len(context_history)} characters")  # Debug: Log context size
        print(f"Input to {llm_name}:\n{full_input}")  # Debug: Log model input

        # Run the LLM command, passing the prompt on standard input
        process = subprocess.Popen(
            command,
            stdin=subprocess.PIPE,
            stdout=subprocess.PIPE,
            stderr=subprocess.PIPE,
            text=True,
            encoding="utf-8",
        )
        stdout, stderr = process.communicate(input=full_input)

        # Process the model's output
        if process.returncode == 0:
            response = stdout.strip()
            if response:
                result_text.insert(tk.END, f"Response from {llm_name}:\n{response}\n\n", "response")
                speak_response(response)
                context_history += f"Question: {question}\nResponse from {llm_name}: {response}\n\n"
                history.append({"question": question, "response": response})
            else:
                response = "I'm sorry, the model returned an empty response."
                result_text.insert(tk.END, f"Fallback Response: {response}\n\n", "response")
        else:
            result_text.insert(tk.END, f"Error from {llm_name}:\n{stderr.strip()}\n\n", "error")
    except FileNotFoundError:
        result_text.insert(tk.END, f"Error: The command '{command[0]}' was not found. Is 'ollama' installed?\n\n", "error")
    except Exception as e:
        result_text.insert(tk.END, f"An unexpected error occurred while using {llm_name}: {str(e)}\n\n", "error")


def cleanup_and_exit(root):

    # Stop the pyttsx3 engine
    global engine
    engine.stop()

    # Wait for all non-main threads to finish
    for thread in threading.enumerate():
        if thread is not threading.main_thread():
            thread.join()

    # Destroy the GUI
    root.destroy()


def create_gui():
    root = tk.Tk()
    root.title("Yaghi's LLM Interface")
    root.configure(bg="#2b2b2b")

    # Bind the close event to cleanup_and_exit
    root.protocol("WM_DELETE_WINDOW", lambda: cleanup_and_exit(root))

    # Title Label
    question_label = tk.Label(root, text="Yaghi's Local LLM Chat", bg="#2b2b2b", fg="white", font=("Arial", 16))
    question_label.pack(pady=5)

    # LLM Dropdown Frame
    llm_frame = tk.Frame(root, bg="#2b2b2b")
    llm_frame.pack(pady=5)
    llm_label = tk.Label(llm_frame, text="Choose LLM:", bg="#2b2b2b", fg="white", font=("Arial", 12))
    llm_label.grid(row=0, column=0, padx=5)
    selected_llm = tk.StringVar()
    selected_llm.set("DeepSeek-r1:32B")
    llm_dropdown = tk.OptionMenu(
        llm_frame, selected_llm,
        "Codellama", "DeepSeek-r1:32B", "DeepSeek-r1:1.5B", "Gemma", "Llama3:70B", "Llama3.3",
        "Mistral", "Mistral (Latest)", "Mistral:7B", "Phi4", "Qwen", "Qwen2.5"
    )
    llm_dropdown.grid(row=0, column=1, padx=5)

    # Input field and "Use Local Dataset" checkbox in the same frame
    input_frame = tk.Frame(root, bg="#2b2b2b")
    input_frame.pack(pady=5)
    question_entry = tk.Entry(input_frame, width=50, font=("Arial", 12))
    question_entry.grid(row=0, column=0, padx=5)
    use_local_llm = tk.BooleanVar()
    local_llm_checkbox = tk.Checkbutton(
        input_frame, text="Use Local Dataset", variable=use_local_llm,
        bg="#2b2b2b", fg="white", selectcolor="#3b3b3b", font=("Arial", 10)
    )
    local_llm_checkbox.grid(row=0, column=1, padx=5)

    # Buttons
    button_frame = tk.Frame(root, bg="#2b2b2b")
    button_frame.pack(pady=5)
    tk.Button(
        button_frame, text="Ask Question",
        command=lambda: on_llm_submit(question_entry, result_text, use_local_llm, selected_llm),
        bg="#4caf50", fg="white", font=("Arial", 10), width=12
    ).grid(row=0, column=0, padx=5)
    tk.Button(
        button_frame, text="Voice Input",
        command=lambda: voice_input(question_entry, result_text),
        bg="#2196f3", fg="white", font=("Arial", 10), width=12
    ).grid(row=0, column=1, padx=5)
    tk.Button(
        button_frame, text="Clear Context",
        command=lambda: clear_context(result_text),
        bg="#ffc107", fg="black", font=("Arial", 10), width=12
    ).grid(row=0, column=2, padx=5)
    tk.Button(
        button_frame, text="Upload Files",
        command=lambda: upload_files(result_text),
        bg="#9c27b0", fg="white", font=("Arial", 10), width=12
    ).grid(row=1, column=0, padx=5)
    tk.Button(
        button_frame, text="Save History",
        command=save_history,
        bg="#ff9800", fg="white", font=("Arial", 10), width=12
    ).grid(row=1, column=1, padx=5)
    tk.Button(
        button_frame, text="Exit",
        command=lambda: cleanup_and_exit(root),
        bg="#f44336", fg="white", font=("Arial", 10), width=12
    ).grid(row=1, column=2, padx=5)

    # Result text area
    result_text = scrolledtext.ScrolledText(
        root, wrap=tk.WORD, height=30, width=90, font=("Arial", 10),
        bg="#1e1e1e", fg="white", insertbackground="white"
    )
    result_text.pack(pady=10)
    result_text.tag_config("question", foreground="#ff9800", font=("Arial", 10, "bold"))
    result_text.tag_config("response", foreground="#4caf50", font=("Arial", 10))
    result_text.tag_config("error", foreground="#f44336")

    root.mainloop()


if __name__ == "__main__":
    create_gui()
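One detail of the script is worth isolating: before each request it keeps only the last 5,000 characters of the accumulated context and then appends the question. The sketch below reproduces that step as a standalone function so its effect is easy to see. `build_prompt` and `MAX_CONTEXT_CHARS` are names introduced for this example, not part of the script.

```python
MAX_CONTEXT_CHARS = 5000  # same limit the script applies before each request


def build_prompt(context, question, limit=MAX_CONTEXT_CHARS):
    """Keep the last `limit` characters of context and append the question."""
    trimmed = context[-limit:] if limit > 0 else ""
    return f"{trimmed}\nQuestion: {question}"


if __name__ == "__main__":
    long_context = "x" * 6000 + " final notes"
    prompt = build_prompt(long_context, "What do the notes say?")
    print(len(long_context), "characters of context,", len(prompt), "characters sent")
```

A practical consequence is that a large dataset loaded through "Use Local Dataset" or "Upload Files" is mostly cut off before it reaches the model, since only the tail survives. If your documents are longer than that, consider raising the limit (within what the model's context window can hold) or loading fewer files at a time.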