By: Husam Yaghi

Artificial General Intelligence (AGI) – the stuff of science fiction and the fervent dream of researchers – promises a future where machines understand, learn, and solve problems just as humans do. But is this dream real, or are we chasing shadows?

What is AGI?

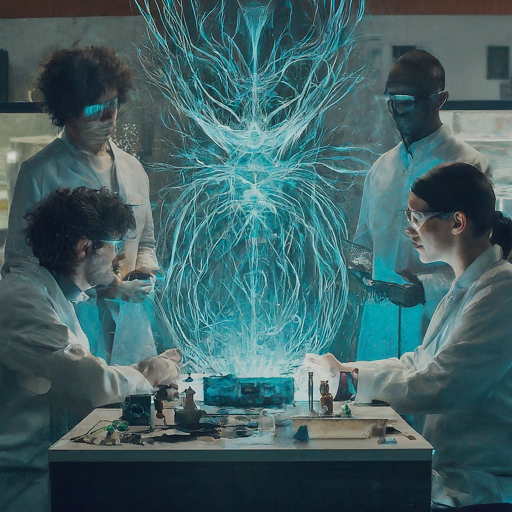

Forget AI that excels at chess or recognizes cats in pictures. AGI aspires to something far grander – mimicking the very essence of human intelligence. Imagine a machine that can tackle diverse tasks, adapt to new situations, and even grasp abstract concepts. Unlike narrow AI, which masters specific domains, AGI seeks the intellectual flexibility and adaptability that define our own minds.

Can We Crack the Code of Human Intelligence?

The pursuit of AGI is an expedition fraught with both immense potential and formidable challenges. Driven by the desire to unlock the secrets of human thought, researchers face hurdles both technical and ethical.

One major obstacle lies in the sheer complexity of our own intelligence. Abstract reasoning, creativity, emotional understanding, and social awareness – these intricate human abilities defy easy translation into algorithms. Can we truly capture the essence of consciousness in lines of code?

Moreover, ethical concerns loom large. Who controls these intelligent machines? What happens when their goals diverge from ours? Could they pose an existential threat? These questions demand careful consideration before AGI is unleashed upon the world.

Not Just About Bigger Hammers

While the path to AGI remains challenging, significant progress has been made. Machine learning, particularly deep learning and reinforcement learning, has enabled AI to achieve remarkable feats in image recognition, language processing, and even strategic decision-making, as when DeepMind's AlphaGo defeated Go champion Lee Sedol in 2016.

However, achieving AGI requires more than just brute computational power. It demands interdisciplinary collaboration, drawing insights from diverse fields like neuroscience, cognitive science, linguistics, and even philosophy to truly unravel the mysteries of human thought.

Can We Mirror Ourselves?

Despite the progress, the possibility of true AGI remains fiercely debated. Some, like Nick Bostrom, warn of potential dangers posed by superintelligent machines. Others, like Ray Kurzweil, believe achieving AGI is inevitable and potentially beneficial.

The debate hinges on several key questions: Can we truly understand and model consciousness? Are there fundamental limitations in computation that prevent replicating human-like intelligence? Or is achieving AGI simply a matter of time and continued research?

Beyond the Horizon

The pursuit of AGI is a double-edged sword. It holds the promise of a brighter future, yet harbors the potential for unforeseen consequences. We must tread carefully, balancing ambition with caution. Let us not be blinded by the allure of progress; instead, let us approach AGI development with a nuanced understanding of its ethical and societal implications. Only then can we ensure that this technological marvel serves humanity, not the other way around.

Disclaimer: “This blog post was researched and written with the assistance of artificial intelligence tools.”